What Is a Robots.txt File? How to Generate a Robots.txt File

Your Robots.txt file tells the search engines which pages on your website they may access and which they may not. For example, if you specify in your Robots.txt file that you don’t want the search engines to access your thank you page, compliant search engine robots won’t crawl that page, so it is unlikely to show up in the search results where web users could find it. Keeping the search engines away from certain pages on your site matters for both the privacy of your site and your SEO.

How Robots.txt Works

Search engines send out small programs called “spiders” or “robots” to crawl your site and bring information back, so that the pages of your site can be indexed in the search results and found by web users. Your Robots.txt file instructs these programs not to crawl the pages you designate with a “Disallow” command. For example, the following Robots.txt commands:

User-agent: *

Disallow: /thankyou

…would block all search engine robots from visiting any page on your website whose address begins with /thankyou.

Notice that before the disallow command, you have the command:

User-agent: *

The “User-agent:” part specifies which robot you want to block and could also read as follows:

User-agent: Googlebot

This command would block only the Google robots, while other robots would still have access to the page.

However, by using the “*” character, you’re specifying that the commands below it apply to all robots. Your robots.txt file must be located in the main (root) directory of your site, because that is the only place robots look for it. For example:

http://www.yoursite.com/robots.txt
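To see how a robot interprets the two rule sets described above, you can check them with Python’s standard-library `urllib.robotparser`. This is only a minimal sketch: the `www.yoursite.com` address is the placeholder domain used in this article, and `SomeOtherBot` is a made-up robot name used for illustration.

```python
from urllib.robotparser import RobotFileParser


def parse_rules(text):
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


# Rules that apply to every robot.
all_robots = parse_rules("User-agent: *\nDisallow: /thankyou\n")

# Rules that apply only to Google's robot.
google_only = parse_rules("User-agent: Googlebot\nDisallow: /thankyou\n")

page = "http://www.yoursite.com/thankyou"

print(all_robots.can_fetch("Googlebot", page))      # False
print(all_robots.can_fetch("SomeOtherBot", page))   # False
print(google_only.can_fetch("Googlebot", page))     # False
print(google_only.can_fetch("SomeOtherBot", page))  # True
```

Note that the parser matches by prefix, so a rule for /thankyou also covers longer addresses that start with the same characters.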

Why Some Pages Need to Be Blocked

There are three reasons why you might want to block a page using the Robots.txt file. First, if a page on your site duplicates another page, you don’t want the robots to index it, because duplicate content can hurt your SEO. Second, you may have a page that users shouldn’t reach unless they take a specific action. For example, if your thank you page gives users access to specific information in exchange for their email address, you probably don’t want people to find that page through a Google search. Third, you may want to keep robots out of private areas of your site, such as your cgi-bin, and keep your bandwidth from being used up by robots crawling your image files:

User-agent: *

Disallow: /images/

Disallow: /cgi-bin/

In all of these cases, you’ll need to include a command in your Robots.txt file that tells the search engine spiders not to access that page, so that they don’t index it in the search results or send visitors to it. Let’s look at how you can create a Robots.txt file that makes this possible.

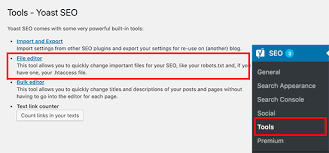

Creating Your Robots.txt File

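By setting up a free Google Webmaster Tools account, you can create a Robots.txt file by selecting the “crawler access” option under the “site configuration” option on the menu bar. Google has since renamed this service Google Search Console, so the menu names may differ from the ones described here.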
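Since a Robots.txt file is plain text, it can also be generated with a short script. The sketch below is one possible approach, not the method any particular tool uses: the helper names `build_robots_txt` and `write_robots_txt` are hypothetical, and the blocked paths are the ones used as examples in this article.

```python
from pathlib import Path


def build_robots_txt(blocked_paths, user_agent="*"):
    """Return robots.txt content that disallows each path for one user agent."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in blocked_paths]
    return "\n".join(lines) + "\n"


def write_robots_txt(destination, content):
    """Save the generated content to a local file, ready to be uploaded."""
    Path(destination).write_text(content, encoding="utf-8")


content = build_robots_txt(["/thankyou", "/images/", "/cgi-bin/"])
print(content)
```

Calling `write_robots_txt("robots.txt", content)` would then save the file locally; it still has to be installed on your site as described in the next section.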

Installing Your Robots.txt File

Once you have your Robots.txt file, upload it to the main (www) directory in the CNC area of your website. You can do this using an FTP program like FileZilla. The other option is to hire a web programmer to create and install your robots.txt file by letting them know which pages you want blocked. If you choose this option, a good web programmer can complete the job in less than one hour.
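If you prefer a script to a graphical FTP program, the same upload can be sketched with Python’s standard-library `ftplib`. Treat this as an illustration under assumptions: the function names are hypothetical, the host name and credentials you pass in are your own hosting details, and the sketch assumes the FTP session opens in the directory that serves the root of your site.

```python
from ftplib import FTP


def upload_robots_txt(ftp, local_path="robots.txt"):
    """Upload a local file as robots.txt into the session's current directory."""
    with open(local_path, "rb") as handle:
        return ftp.storbinary("STOR robots.txt", handle)


def connect_and_upload(host, user, password, local_path="robots.txt"):
    """Open an FTP session, log in, and upload the file.

    Assumes the login directory is the site's main directory; if it is not,
    call ftp.cwd(...) with the correct directory before uploading.
    """
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        return upload_robots_txt(ftp, local_path)
```

You would call `connect_and_upload` with your own host name, user name and password. Plain FTP sends the password unencrypted, so if your host supports it, `ftplib.FTP_TLS` is the safer choice.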

Conclusion

It’s important to update your Robots.txt file whenever you add pages, files or directories to your site that you don’t want the search engines to index. Keep in mind that the file only instructs well-behaved robots and does not itself restrict access, so it supports the privacy of your site rather than guaranteeing it. Kept up to date, it will help you get the best possible results with your search engine optimization.
